Created by Yasin Dagasan, Harvey Nguyen and Mark Grujic

This blog is a republication of an accepted abstract published in the Australian Exploration Geoscience Conference (AEGC) 2021 conference proceedings.

Summary

The application of computer-vision technologies to geological datasets has previously been shown to objectively verify existing datasets (e.g., automated calculation of geotechnical parameters) as well as the creation of new datasets (e.g., fracture orientation data for an entire deposit).

When visually inspecting core, a geologist will infer geological features that are masked by artefacts like hand-drawn mark up; a vein is not intersected by an orientation line and the geologist knows this. Similarly, physical inspection of the core means that interpretation is not affected by artefacts that can manifest in photography, including reflections from non-diffuse lighting in the core shed. A computer vision model does not initially know the relevance of these non-geological artefacts and must be trained accordingly.

We introduce the application of neural network architectures to identify and infer geological information that is hidden by non-geological artefacts in core photos, including a) core markup such as orientation or cut lines and handwriting, and b) lighting effects including the reflection in wet photos taken because of the use of non-diffuse light sources.

The process begins with a deep convolutional neural network instance segmentation model that is trained to identify cohesive rock within core photos associated with the desired artefacts to be masked. Following this, a second convolutional neural network that can infer geologically reasonable textures in place of the irregularly shaped masked artefacts is trained, using partial convolutions that are conditioned on valid pixels. Results show that models trained in this manner can be used to improve quantified image-based knowledge, including assessing similarity of rock texture.

Introduction

Geological logging of drill core requires a significant amount of time and human resources. The logging tasks are prone to subjectivity; data logged by different geologists may exhibit variable quality and consistency (Hill et al, 2020). Tasks involved in manually logging are rather repetitive and suitable for automated logging and interpretation. The use of machine learning methods to automate such repetitive and subjective tasks to achieve consistent logging and rapid data processing is not new (Dubois et al, 2007). Data involved in logging is available in numerous formats. Of the available formats, logging from core imagery is convenient and timely, performed at the rig or in the core shed. This paper introduces a means of extracting geological and geotechnical information from core photography, using Computer Vision (CV) models, and addresses several associated challenges.

When logging core, geoscientists seek to classify rock into geological domains These include lithology and alteration domains, and identifying geological features including veins and other textures. The human visual system is remarkably adept at inferring information from obfuscated scenes (Motoyoshi, 1999). In the geological setting, with appropriate geological training, those logging core understand concepts including that veins are not bisected by orientation lines, or that metre-marks do not influence geoscientific understanding of rock character.

When attempting to replicate the way that the human visual system interprets core using CV models, one challenge is that the models are easily biased by geological features that are masked by non-geological artifacts present on the core, errors that result from this are called ‘adversarial examples’ when approached from a CV standpoint. Such artifacts can include core markup such as orientation or cut lines, annotated handwriting, mechanical/drillers breaks marks, and lighting effects including shine in photos taken using non-diffuse light sources.

With a large enough model training dataset, it is possible to minimise the influence of the non-geological artefacts; for example, a model can be sufficiently robust against non-geological artefacts if it has been trained with enough examples of a particular lithology with and without orientation lines, and with and without other markup. However, in production Machine Learning (ML) environments, and in the interest of optimising human resources, we show how sparsely labelled CV models using neural network architectures can result in greater model accuracy, by training the model to infer geological information that is masked by artefacts in core photos. First, a deep convolutional neural network instance segmentation model is trained to identify cohesive zones within core photos that are associated with the desired artefacts to be masked. Following this, a convolutional neural network that can infer geologically reasonable textures in place of the irregularly shaped masked artefacts is trained, using partial convolutions that are conditioned on valid pixels.

Method and results

Geological similarity

To demonstrate the benefit of CV models that infer obfuscated geological information, we will compare the results of identification of similar rock characters, with and without applying the described methodology. To do this, we divide analysis-ready core photography into square tiles and assess the geological and non-geological content of similar tiles before and after implementation of the described models.

Analysis-ready imagery is fundamental to any CV modelling of core photography. This means that photo de-warping, identification of coherent and incoherent rock within rows, understanding compaction, core-loss and metadata entry errors, and optical character recognition of depth annotations must be considered. In this paper, we utilise the commercially available Datarock Core product to produce analysis-ready core photography. An example of this is shown in Figure 1.

Figure 1. An example of a depth registered core row.

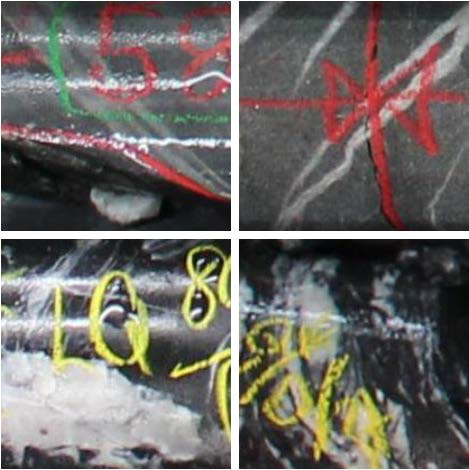

Figure 2 shows examples of core image tiles with degrees of geological obfuscation. Artefacts including writing on the core may have a negative impact on the performance of machine learning models.

Figure 2. Square tiles derived from cropping the row export of the core imagery.

Mask identification

Before inferring geological information, accurate artefact masks must first be identified. To achieve this, a training database was built to identify pixels belonging to artefacts. 177 image tiles were manually labelled to train a convolutional neural network mask model. The training was selected to sample the variety of obfuscations. Training data includes that seen in Figure 3.

Figure 3. Example mask model training data. Left: the raw image. Right: the manually labelled artefact mask.

The convolutional neural network used to train the mask model was based on the Resnet34 (He et al, 2016) model. To avoid overfitting and achieve a more generalised model, several data augmentations are used in the model training. These augmentations include rotation, lighting, and warping. One cycle policy (Smith et al, 2019) was used to train the models in 4 stages totalling 200 epochs. We then apply post-processing techniques including dilation and erosion to simplify the model masks. The resulting segmentation model performance is demonstrated in Figure 4.

Geological inference

The geological character that fills these irregular masks are then predicted using a neural network model, based on partial convolutions. In computer vision literature, the process of imputing missing information from imagery is known as inpainting. Lui et al (2018) show the advantages of inpainting using partial convolutions over traditional statistical methods which have no context of visual semantics. Equally, in the context of inpainting, partial convolutions are shown to be superior to existing deep neural network models including U-Net (Ulyanova et al, 2017); fully convolutional networks make prior assumptions about the value within the masked regions, introducing artificial edges and lack of realistic modelled texture.

The partial convolutional inpainting method was implemented in the PyTorch (Paszke et al, 2019) framework. Inference of geological data on some example tiles are seen in Figure 4.

Figure 4. Left: raw image. Middle: mask predicted by the segmentation model. Right: results of inpainting.

Model effects on image similarity search

Image similarity search is an important task in building models using geological imagery, including core. Given a query image with certain geological features in it (i.e., veins, grains, fractures), we are often interested in finding similar occurrences elsewhere in the deposit or image library. This can help build orebody knowledge and guide exploration activity. To facilitate such a search, we use a pretrained ResNet50 model to extract numeric, descriptive image features from each square tile. This allows to achieve dimensionality reduction using pre-trained convolutional filters that are activated based on certain features existing on given imagery. Grujic et al (2019) demonstrate this methodology applied to regional geophysical datasets.

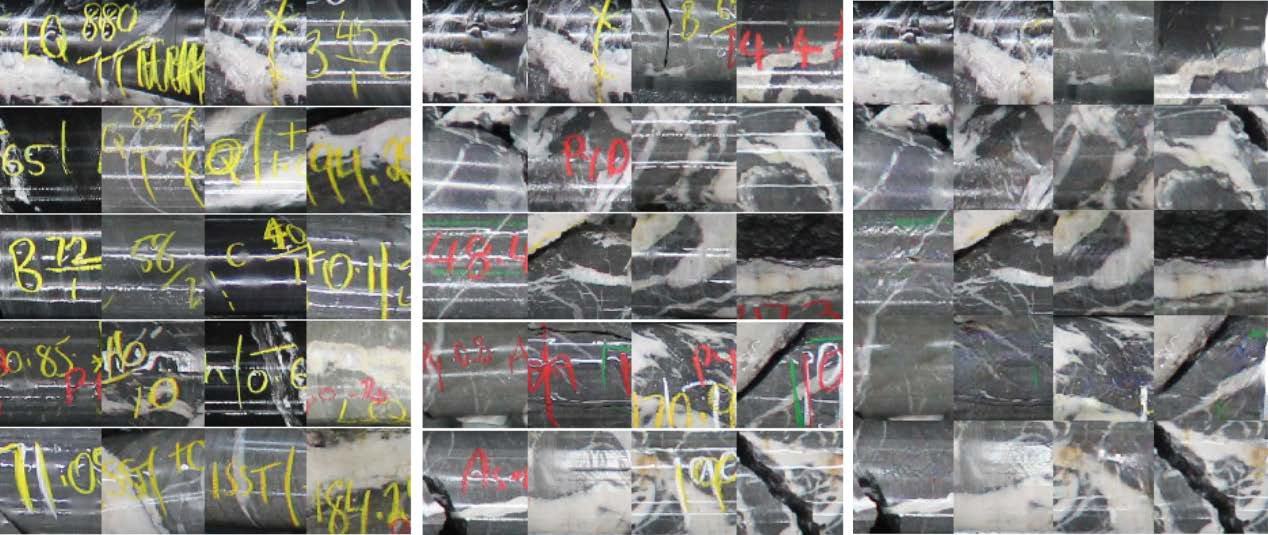

To perform an image similarity search, an extracted feature of a queried image is obtained, and k most similar images are retrieved using the k-nearest-neighbours algorithm, using a cosine similarity distance metric. Examples of such a search can be seen in Figure 5. The image in the top-left corner is the query image whereas the rest of the images are the top 19 most similar images in the database comprising 58,107 images. The most common characteristics of the similar images appear to be yellow writing on the core. However, the geological feature in the square tile, a vein, is the feature of interest. Some of the most similar images do not contain any vein at all and exhibit varying rock texture and colour.

Figure 5. Top right image in each of the three sets is the same queried image. Left: most-similar images using raw tiles. Middle: most-similar images after accounting for non-geological features. Right: as with middle, showing the inpainted tiles.

The same query has also been done using inpainted core image tiles, where we see (Figure 5) that most similar images contain similar veins in a similar host rock, compared to the raw image similarity search. This shows how geological similarity searches using character from imagery can be improved by employing the described processes that infer obfuscated geological information.

Figure 6 compares the core rows that highly similar image tiles come from, before and after applying the described methodology. It is shown that the methodology improves the overall geological accuracy of the similarity search.

Figure 6. A: The row from which the queried tile originates. The query tile is highlighted in red. B: three highly similar images and associated rows from the naive similarity search. C: three highly similar images and associated rows from the similarity search described in the methodology. The nature of the veins and host-rock are much more apparent when applying the methodology. The last row shows that the model has found the preceding tile to the query, without inherent spatial context.

Conclusions

The proposed methodology aimed to minimise the effect of non-geological artifacts on drill core imagery in the context of applied computer vision modelling. The two-stage approach first identifies features to remove, then infer geological information using partial convolutions. The results show that the methodology alleviates the effects of artefacts on image similarity search when using image features from a pretrained convolutional neural network.

The performance of the methodology is predominantly affected by the accuracy of the segmentation model identifying the pixels with artefact. Our trials on photo libraries from different deposits with varying image quality showed that the trained model can perform the task. However, it performs poorer if the quality of the photos and the existing artefacts are substantially different to those with which the model was initially trained.

Acknowledgements

We would like to thank Fosterville Gold Mine for permission to publish this paper using their core imagery. We would also like to acknowledge Datarock for providing analysis-ready processed imagery and the compute resources required to implement the described methods.

This methodology has parallels to the improving geological mapping by inpainting surface infrastructure artefacts approach, where stable diffusion models are used to learn from surrounding regions and inpaint to approximate the real data beneath the infrastructure artefacts.

References

Dubois, M. K., Bohling, G. C., Chakrabarti, S., 2007, Comparison of four approaches to a rock facies classification problem, Computers & Geosciences, Volume 33, Issue 5, 599-617

Grujic, M., Webb, L., Carmichael, T., 2019, Geophysics and neural networks: learning from computer vision, ASEG Extended Abstracts 2019

He, K., Zhang, X., Ren, S. and Sun, J., 2016. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, 770-778

He, K., Gkioxari, G., Dollár, P. and Girshick, R., 2017. Mask r-cnn. In Proceedings of the IEEE international conference on computer vision, 2961-2969

Hill, E. June, Mark A. Pearce, and Jessica M. Stromberg. 2021. “Improving Automated Geological Logging of Drill Holes by Incorporating Multiscale Spatial Methods.” Mathematical Geosciences 53(1): 21–53.

Liu, G., Reda, F.A., Shih, K.J., Wang, T.C., Tao, A. and Catanzaro, B., 2018. Image inpainting for irregular holes using partial convolutions. In Proceedings of the European Conference on Computer Vision (ECCV), 85-100

Motoyoshi, I., 1999, Texture filling-in and texture segregation revealed by transient masking, Vision Research, Volume 39, Issue 7, 1285-1291.

Paszke, A., Gross, S., Massa F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., and Chintala, S., 2019, PyTorch: An Imperative Style, High-Performance Deep Learning Library, Advances in Neural Information Processing Systems 32, 8024-8035

Ronneberger, O., Fischer, P. and Brox, T., 2015, October. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, 234-241. Springer, Cham.

Smith, L.N., Topin, N., 2019, May. Super-convergence: Very fast training of neural networks using large learning rates. In Artificial Intelligence and Machine Learning for Multi-Domain Operations Applications (Vol. 11006, p. 1100612). International Society for Optics and Photonics.

Ulyanov, D., Vedaldi, A., Lempitsky, V., 2017, Deep Image Prior, arXiv:1711.10925 [cs.CV]